I had the opportunity to attend DevFest Nantes in France on October 16th and 17th, 2025, with my company.

We had a booth and I also attended a few conferences.

DevFest Nantes 2025 - Outside

The booth

This kind of event mainly serves two goals for my company:

- awareness of the company and the product, for end-users and developers

- hiring, as we plan to hire quite a lot of people in engineering over the next few months (Product managers, developers, …)

My company had a big booth at the event and a few goodies to give away, so people came to the booth for different reasons:

- some just wanted to get the goodies before moving on to the next booth

- some were looking for work and were interested in what we do. I met people interested in Technical Product Manager positions, developers, and data scientists

- end-users who used our product and wanted to meet us and/or had a few questions

- people looking for software that could fit their needs, who came to see us but also our competitors at the event

- curious people wondering what we were doing

Because this event is mainly aimed at developers, our team was mostly made up of people from the Engineering department (including myself), along with people from Talent Acquisition and Developer Advocacy.

As it was my first event on the “organizer” side, it was really interesting to meet different people and answer their questions. It brings us closer to “reality” and to the use cases of our customers and end-users.

It was also a good opportunity to spend some time with colleagues that I do not see often.

Conferences

While at the event, I also attended a few conferences. My notes are below. They are not very structured, as I took them during the conferences, but they should capture the core of the content.

Terramate, l’outil qui fait scaler votre code Terraform

Terramate, l’outil qui fait scaler votre code Terraform (“Terramate, the tool that makes your Terraform code scale”) by Mathieu HERBERT, in French

- Terraform is used for Infrastructure as Code (IaC) with Kubernetes

- but how does it scale with multiple environments and different teams?

- why Terramate?

- Support for multiple environments (dev, staging, production, …)

- Gitops / traceability

- Change detection

- Terramate is a CLI wrapper on top of Terraform, used to deploy Terraform stacks

- demo: code generation with Terramate, whose language is more flexible than HCL (HashiCorp Configuration Language)

- a new workflow: a new resource is first added to a development stack, then easily promoted to the production environment

- it can also do orchestration without generating any code, by importing existing Terraform code. This is very useful when migrating an existing Terraform code base

- at Dataiku: Terramate generates the Terraform `plan`, which is added as a comment on the Pull Request. When merging, a new comment is added with the result of the `apply`. This makes it possible to trace who made a change and what was changed (GitOps / traceability). It implies that Pull Requests are merged sequentially and are always up to date with the target branch

- drift detection with the production environment (except in a few cases): a `plan` is regularly executed to compare the production stack's code with the actual production environment, check that the code is up to date, and update it if necessary

- conclusion: existing Terraform code is easy to import. It requires a bit of work on the CI/CD side. At Dataiku, the transition was done 1.5 years ago. As Terramate is a stateless tool, it is integrated via its CLI into GitHub Actions (GHA). There are currently 400 stacks, with 20 people
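To make the drift detection step a bit more concrete, here is a minimal sketch in Go. It is not how Terramate does it: it simply assumes a `terraform` binary on the `PATH` and a hypothetical stack directory (`stacks/production`), and relies on the `-detailed-exitcode` flag of `terraform plan`, which exits with 0 when there are no changes, 1 on error, and 2 when the plan contains changes (i.e. drift). The names `checkDrift` and `classifyPlanExitCode` are made up for this example.

```go
package main

import (
	"errors"
	"fmt"
	"os"
	"os/exec"
)

// DriftStatus summarizes the outcome of a drift check on one stack.
type DriftStatus int

const (
	NoDrift   DriftStatus = iota // plan succeeded with an empty diff
	Drift                        // plan succeeded but found changes
	PlanError                    // plan failed (or could not be started)
)

// classifyPlanExitCode maps the exit codes of `terraform plan -detailed-exitcode`
// (0 = no changes, 1 = error, 2 = changes present) to a DriftStatus.
func classifyPlanExitCode(code int) DriftStatus {
	switch code {
	case 0:
		return NoDrift
	case 2:
		return Drift
	default:
		return PlanError
	}
}

// checkDrift runs a plan in dir (a hypothetical stack directory) and reports
// whether the real infrastructure differs from the code.
func checkDrift(dir string) (DriftStatus, error) {
	cmd := exec.Command("terraform", "plan", "-detailed-exitcode", "-input=false")
	cmd.Dir = dir
	cmd.Stdout = os.Stdout
	cmd.Stderr = os.Stderr

	err := cmd.Run()
	if err == nil {
		return NoDrift, nil
	}
	var exitErr *exec.ExitError
	if errors.As(err, &exitErr) {
		status := classifyPlanExitCode(exitErr.ExitCode())
		if status == Drift {
			return Drift, nil // exit code 2 is not a failure, it signals drift
		}
		return status, err
	}
	// The terraform binary is missing or the directory does not exist.
	return PlanError, err
}

func main() {
	dir := "stacks/production" // hypothetical stack directory
	if len(os.Args) > 1 {
		dir = os.Args[1]
	}
	status, err := checkDrift(dir)
	switch {
	case err != nil:
		fmt.Println("plan failed:", err)
	case status == Drift:
		fmt.Println("drift detected in", dir)
	default:
		fmt.Println("no drift in", dir)
	}
}
```

Run on a schedule (from GitHub Actions, for example) for each production stack, such a check could flag drift before the next Pull Request runs into it; how the result is reported is left out of this sketch.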

My take: a very good talk from a colleague, simple and straight to the point in showing the power of Terramate.

An intro to eBPF with Go: The Foundation of Modern Kubernetes Networking

- telemetry is not enough to fully understand the application at the kernel level

- careful with the kernel: syscalls, maps, open file descriptors, …

- eBPF acts safely inside the kernel without changing it

- it attaches code, loaded from user space, to kernel events: tracepoints, kprobes, …

- the program is checked by the kernel verifier before being loaded, so it is safe to run

- use case with eBPF maps:

- kernel to user-space

- user-space to kernel

- kernel to kernel

- using Go with Cilium's eBPF library to load and interact with the eBPF program, because Go is a cloud-native language

- demo of:

- a C program to trace open files

- translate it to Go code

- attach the eBPF program to the kernel

- use sudo to run the program

- moral 1: no recompilation

- XDP capabilities to attach eBPF hooks to the network path. For example, it can drop a packet at several levels, even before it reaches the kernel's networking stack

- moral 2: eBPF at the kernel edge

- in Kubernetes, eBPF programs can be loaded on the nodes where pods are running; that way, they can act as probes and log events for all the pods on the node

- Cilium is widely used and open source; it has grown from a networking tool and ecosystem into a security ecosystem as well

- moral 3: free benefits for everyone

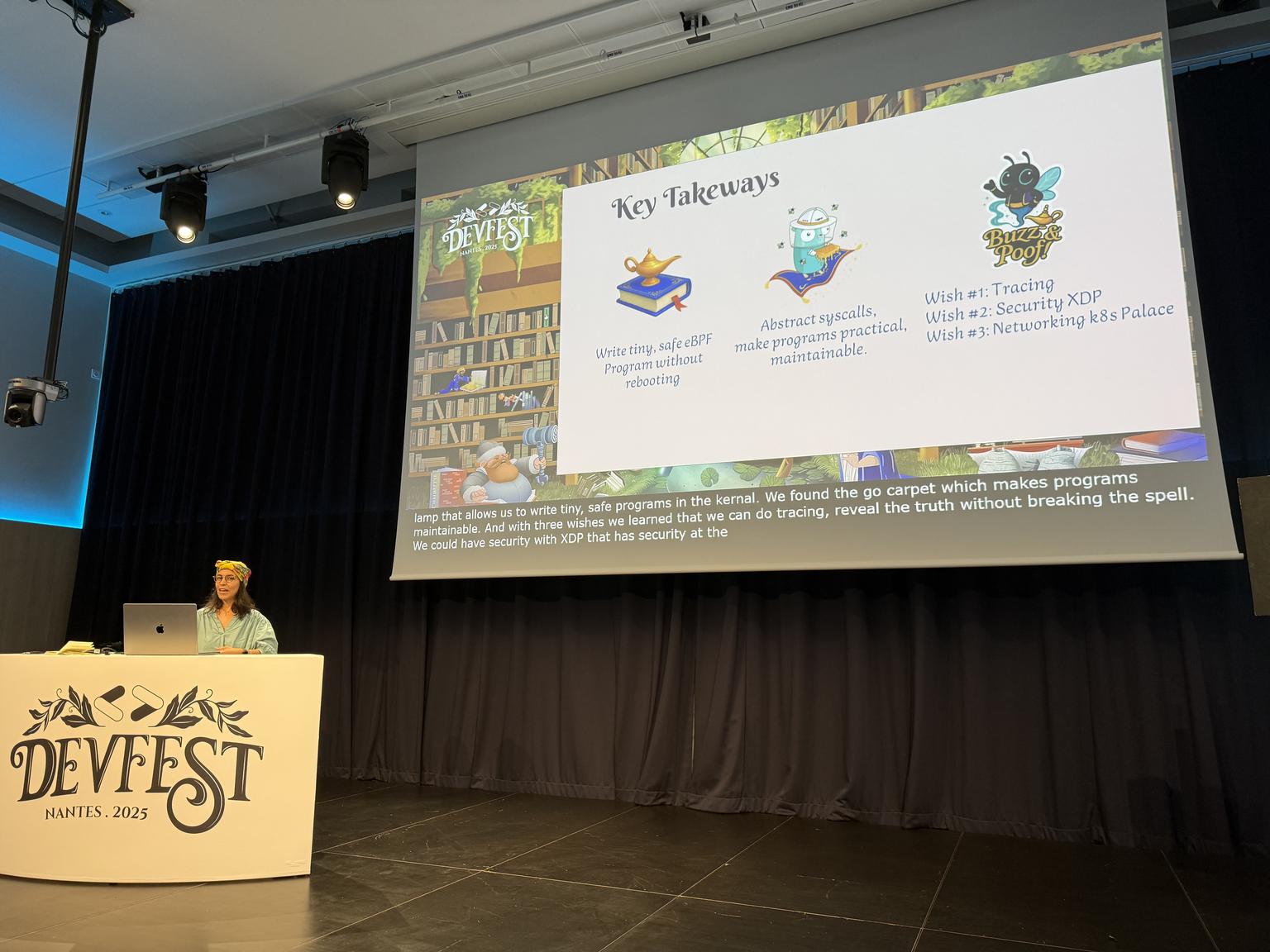

- key takeaways:

- write tiny, safe eBPF programs without rebooting

- abstract syscalls to make programs practical and maintainable

- wish 1: tracing, wish 2: security XDP, wish 3: networking K8s palace

Note: there seems to be no easy way to find out whether a program is (or was) being tampered with through eBPF.

My take: the talk was a very nice high-level overview of eBPF. Its main problem is that it was a bit cluttered by a side story around Aladdin and the genie. Without it, the talk would have been clearer and could have gone deeper into a few details.

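Limits, Requests, QoS, PriorityClasses, on balaie ce que vous pensiez savoir sur le scheduling dans Kubernetes (“Limits, Requests, QoS, PriorityClasses: we sweep away what you thought you knew about scheduling in Kubernetes”)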

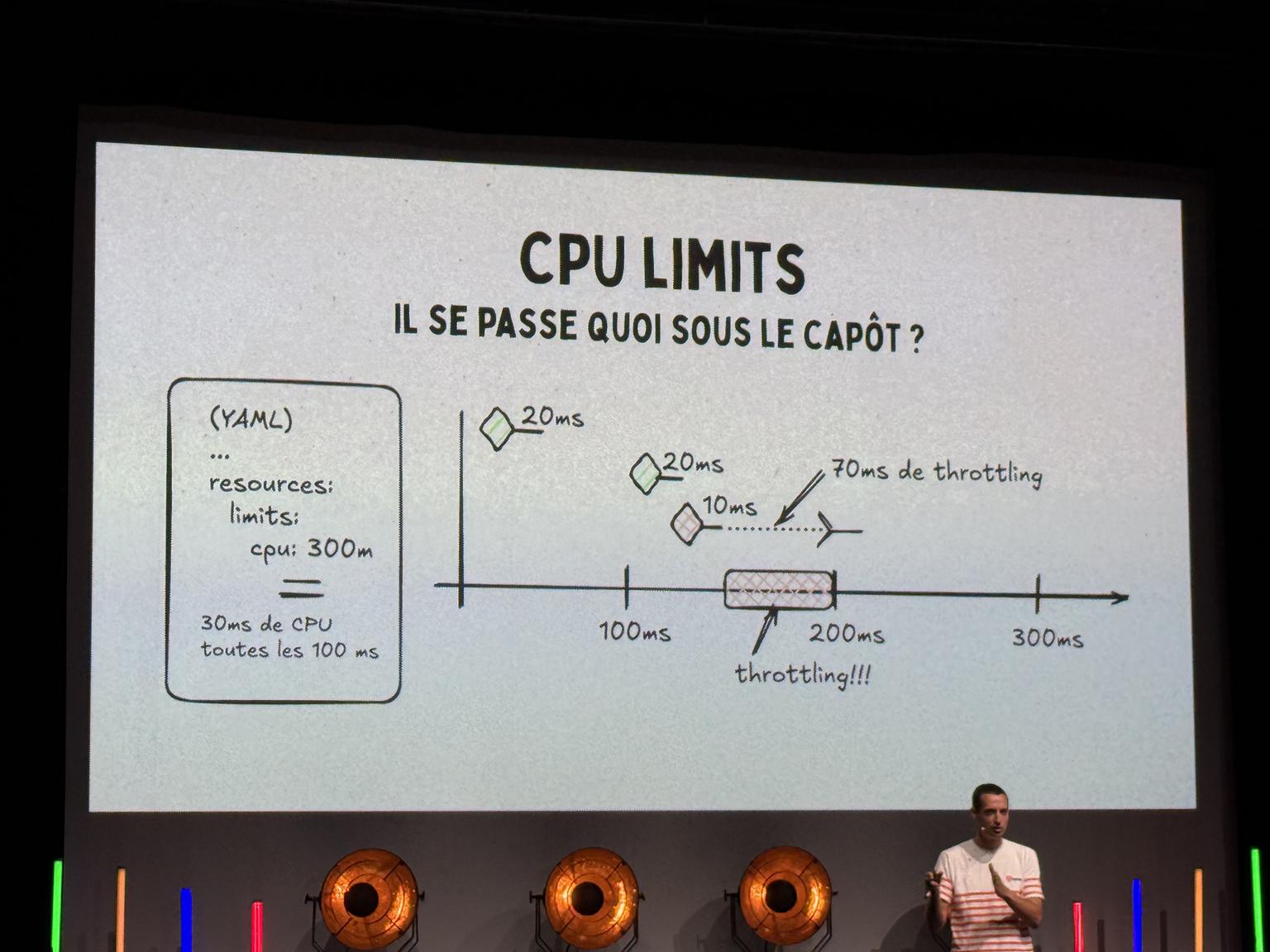

- `limits`: a constraint on a resource that the pod cannot go beyond

- `requests`: what the pod asks of the Kubernetes scheduler

There are:

- compressible resources: shared CPU, …

- incompressible resources: RAM, disk, …

Limits

- demo with two pods, one with a CPU limit at 10% and the other at 100%: the program limited to 10% of the CPU shows latency spikes

- overall, it is a bad idea to put a CPU limit on a pod, as the limit is enforced via cgroups, which throttle the process once it has used up its CPU quota

- example: load testing with k6

- due to how the CPU limit is enforced by the Linux scheduler, latency is artificially added to each request whenever the process is throttled

Requests

- memory: requests and scheduling

- Kubernetes needs requests to know on which node to place which pod

- tool: kwok to simulate lots of pods and kubelets to load test scheduling in Kubernetes

- requests that are too small are useless: they need to match the real usage of the application

- CPU requests are used to compute CPU shares: an application can have different performance profiles depending on its share
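The two roles of requests can be illustrated with a small Go sketch. `fits` mimics, in a very simplified way, the scheduler check that a pod's requests fit in what is left on a node (the real scheduler has many more filters and scoring steps). `cpuShares` uses the cgroup v1 conversion of 1 CPU = 1024 shares (with a minimum of 2); with cgroup v2, a `cpu.weight` value derived from the same request is used instead. All type and function names are hypothetical.

```go
package main

import "fmt"

// Resources holds CPU in millicores and memory in bytes.
type Resources struct {
	MilliCPU int64
	Memory   int64
}

// Node is a simplified view of a node for scheduling purposes.
type Node struct {
	Name        string
	Allocatable Resources // what the node can offer to pods
	Requested   Resources // sum of the requests of the pods already placed on it
}

// fits reports whether a pod's requests fit in what is left on the node.
// Like the scheduler, it only looks at requests, not at actual usage.
func fits(n Node, req Resources) bool {
	return n.Requested.MilliCPU+req.MilliCPU <= n.Allocatable.MilliCPU &&
		n.Requested.Memory+req.Memory <= n.Allocatable.Memory
}

// cpuShares converts a CPU request into cgroup v1 cpu.shares
// (1 core = 1024 shares, with a minimum of 2).
func cpuShares(milliCPU int64) int64 {
	shares := milliCPU * 1024 / 1000
	if shares < 2 {
		return 2
	}
	return shares
}

// contendedSplit returns the fraction of a fully busy CPU that each pod gets
// when they all compete for it: proportional to their shares.
func contendedSplit(requests []int64) []float64 {
	shares := make([]int64, len(requests))
	var total int64
	for i, r := range requests {
		shares[i] = cpuShares(r)
		total += shares[i]
	}
	split := make([]float64, len(requests))
	for i, s := range shares {
		split[i] = float64(s) / float64(total)
	}
	return split
}

func main() {
	// Hypothetical node: 4 CPUs and 8GiB, with 3.5 CPUs and 2GiB already requested.
	node := Node{
		Name:        "node-a",
		Allocatable: Resources{MilliCPU: 4000, Memory: 8 << 30},
		Requested:   Resources{MilliCPU: 3500, Memory: 2 << 30},
	}
	fmt.Println(fits(node, Resources{MilliCPU: 500, Memory: 1 << 30}))  // true
	fmt.Println(fits(node, Resources{MilliCPU: 1000, Memory: 1 << 30})) // false: only 500m left

	// Two busy pods requesting 500m and 250m on the same core: roughly a 2:1 split.
	fmt.Println(contendedSplit([]int64{500, 250}))
}
```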

QoS

- K3s is a distribution to deploy lightweight clusters, for example on the edge

- a pod can be evicted depending on its priority

- demo with different priorities:

- BestEffort, Guaranteed and Burstable

- connect to the pod and use `stress-ng` to put memory under pressure

- result: pods are killed in this order: BestEffort, Burstable, Guaranteed. They are marked as `Evicted`

- what happens when the cluster has lots of non-priority workloads and a priority one comes in?

PriorityClasses

- the higher the value, the higher the priority

- Kubernetes removes pods with a lower priority to make room for the one with a high priority. They are marked as preempted

- sometimes limits have to be really big for QoS

- otherwise the cluster will need to be scaled (survival mode)
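Preemption can be sketched the same way. This is a strong simplification of what the Kubernetes scheduler does (it also tries to minimize the number of victims and to respect PodDisruptionBudgets, among other things): on a single node and for CPU requests only, it removes strictly lower-priority pods, lowest priority first, until the incoming pod fits. All names and numbers are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// RunningPod is a pod already placed on the node.
type RunningPod struct {
	Name     string
	Priority int32 // value coming from the pod's PriorityClass
	MilliCPU int64 // CPU request
}

// selectVictims returns the pods to preempt so that a pod with the given
// priority and CPU request fits on a node with `capacity` millicores.
// Only strictly lower-priority pods are candidates, lowest priority first.
// ok is false if the pod cannot fit even after preemption.
func selectVictims(capacity int64, running []RunningPod, priority int32, request int64) (victims []string, ok bool) {
	var used int64
	for _, p := range running {
		used += p.MilliCPU
	}
	if used+request <= capacity {
		return nil, true // fits without preempting anything
	}
	candidates := make([]RunningPod, 0, len(running))
	for _, p := range running {
		if p.Priority < priority {
			candidates = append(candidates, p)
		}
	}
	sort.SliceStable(candidates, func(i, j int) bool {
		return candidates[i].Priority < candidates[j].Priority
	})
	for _, p := range candidates {
		victims = append(victims, p.Name)
		used -= p.MilliCPU
		if used+request <= capacity {
			return victims, true
		}
	}
	return nil, false // not enough lower-priority pods to make room
}

func main() {
	running := []RunningPod{
		{Name: "batch-1", Priority: 0, MilliCPU: 1500},
		{Name: "batch-2", Priority: 0, MilliCPU: 1500},
		{Name: "web", Priority: 1000, MilliCPU: 800},
	}
	// A critical pod (hypothetical priority 1000000) needs 2 CPUs on a 4-CPU node.
	victims, ok := selectVictims(4000, running, 1000000, 2000)
	fmt.Println(victims, ok) // [batch-1 batch-2] true
}
```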

Takeaways

- no CPU `limits`, but there may be exceptions

- `requests` and `limits` matching reality everywhere

- QoS: allows survival in case of unexpected load

- PriorityClasses: ensure the deployment of critical applications

On load testing: nothing will replace testing in production. We can get closer to it once we have some real-world data, but there is no magic.

My take: a nice overview of some Kubernetes concepts with live demos.

Il était une Faille: Construire la Résilience dans un Monde Chaotique (“Once Upon a Flaw: Building Resilience in a Chaotic World”)

There are new types of failures due to microservices:

- network

- data consistency

- inter-service dependencies

Network

- physical layer

- DNS

- latency spikes

- packet loss

- network partitions

Sometimes, the source of an error is hard to troubleshoot.

Example:

- DNS error

- fix: HTTP timeout, fix in network routes

Thundering herd problem

- timeouts

- retries

- circuit breaker

Example:

- a service used on the side was removed manually by mistake

- by removing it, its DNS entry was also removed

- all services started spamming the DNS, requests went from 15k/s to 120k/s

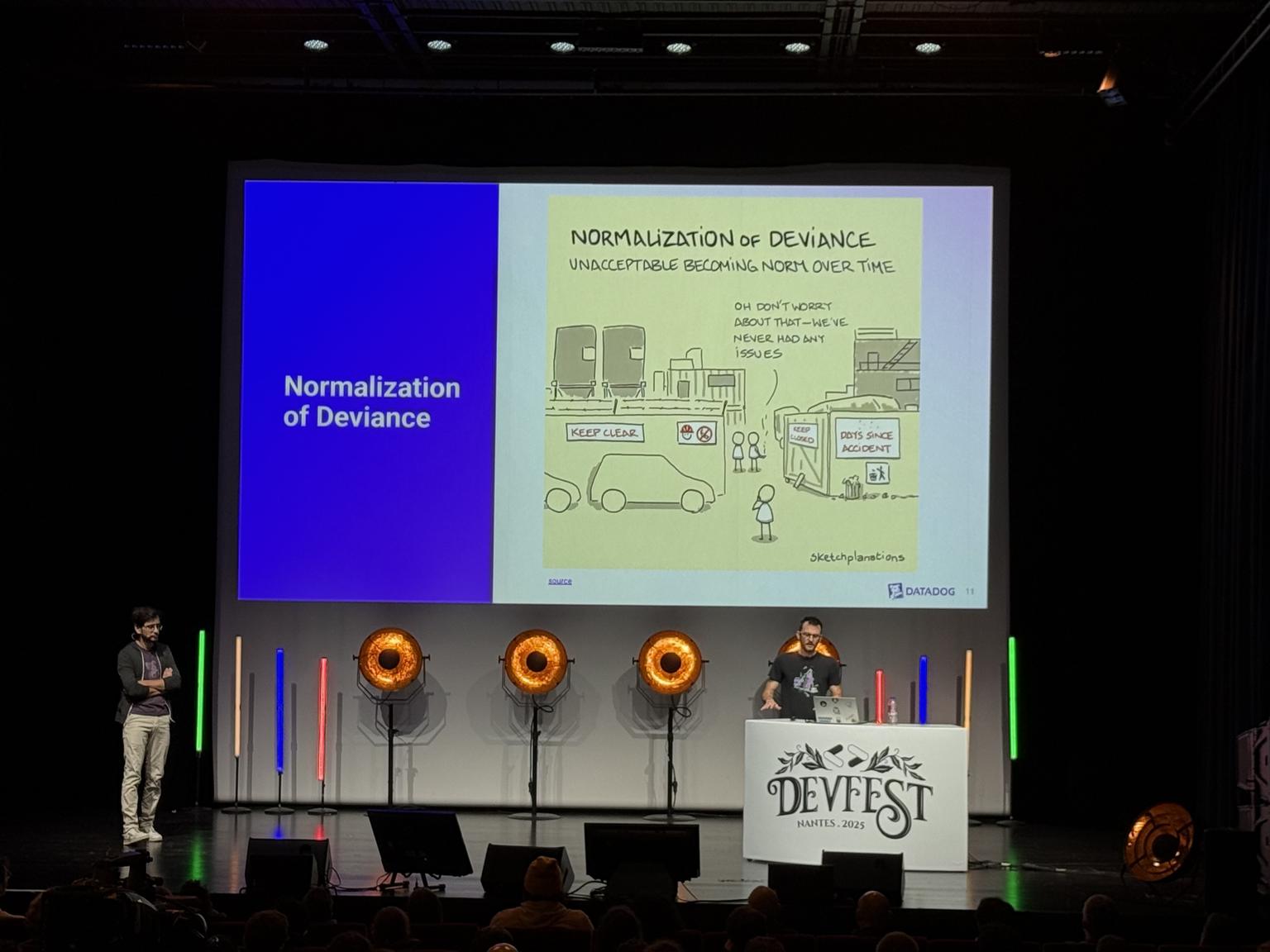

Normalization of deviance: bad habits around production problems become “business as usual”, and nobody wants to change them.

Availability zones

- in theory, independent within a region

- example of a round robin: app A in Zone1 calls B in Zone2, and app A in Zone2 calls B in Zone1

- grey failures: not visible right away, but they start to pile up (for example, packet loss that is hard to identify)

- circuit breaker pattern could have helped in theory, but when the problem is intermittent, it does not work well

At Datadog: manual check, verification with the cloud provider, and then deactivation of the zone to redirect all its traffic to the other zones

Control and data plane separation

Isolate the two to avoid impacts:

- resilience to exhaustion

- clear failure boundaries

- scalability and flexibility

- operational reliability

- security and governance

Example: OpenAI deployed telemetry on a service. Everything went well in staging, but once it was deployed to production, the number of requests brought the Control Plane down.

At Datadog: one cluster per Availability Zone, to limit the blast radius of a problem to a single cluster. That way, the other zones are not impacted

Isolation-cells

Cells are mini infrastructures:

- bulkhead pattern

- isolate consumers and services from cascading failures

- preservation of partial functionalities

- scalable and independent growth
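The bulkhead pattern can be sketched in Go with a buffered channel used as a semaphore: each dependency gets a fixed number of slots, and calls beyond that are rejected immediately instead of piling up. This is only an illustration of the pattern at the level of a single process, not of a cell-based infrastructure; the `Bulkhead` type and the `payments` dependency are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// ErrBulkheadFull is returned when all slots of a bulkhead are in use.
var ErrBulkheadFull = errors.New("bulkhead full: request rejected")

// Bulkhead caps the number of concurrent calls to one dependency, so that a
// slow or hanging dependency can only tie up its own slots.
type Bulkhead struct {
	slots chan struct{}
}

func NewBulkhead(maxConcurrent int) *Bulkhead {
	return &Bulkhead{slots: make(chan struct{}, maxConcurrent)}
}

// Do runs op if a slot is free and rejects the call immediately otherwise,
// instead of queueing it behind a dependency that is already struggling.
func (b *Bulkhead) Do(op func()) error {
	select {
	case b.slots <- struct{}{}:
		defer func() { <-b.slots }() // free the slot when op returns
		op()
		return nil
	default:
		return ErrBulkheadFull
	}
}

func main() {
	// Hypothetical: one bulkhead per downstream service, here "payments".
	payments := NewBulkhead(2)
	started := make(chan struct{})
	release := make(chan struct{})
	var wg sync.WaitGroup

	// Two calls hang on a slow dependency and occupy both slots.
	for i := 0; i < 2; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			_ = payments.Do(func() {
				started <- struct{}{}
				<-release
			})
		}()
	}
	<-started
	<-started

	fmt.Println(payments.Do(func() {})) // bulkhead full: request rejected
	close(release)
	wg.Wait()
	fmt.Println(payments.Do(func() {})) // <nil>
}
```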

Multi-regions

In practice, regions are not all isolated: see the Facebook and Google incidents (quota).

At Datadog: all regions are independent: no network calls from one region to another, and progressive deployment region by region. It did not prevent errors / mistakes.

Example: a security update applied to all hosts at the same time took down 50% of them, which took everything down

Chaos engineering

- reality != assumptions

- failures are inevitable

- expose hidden dependencies

- validate resilience mechanisms

- build organizational muscle memory

SLI and SLO

See https://cloud.google.com/blog/products/devops-sre/sre-fundamentals-slis-slas-and-slos

- clarity of expectations

- prioritization of work

- trust between teams

- early warning signals

- supports incident management

An SLO is a contract between services to know what is achievable.

An SLI is a signal for incidents.

PRRs and SRRs

Production Readiness Review (PRR):

- increase launch safety

- improve operational excellence

- checklist on all new services

System Risk Reviews (SRR):

- identify top risks and owners

- plan remediation

- brainstorming on what can fail / what is wrong but nobody is working on

Organization

- incident management:

- define roles

- document runbooks

- training

- incidents are inevitable: who manages it? What can I do to help?

- blameless culture

- focus on learning

- build trust and stronger teams

Elasticity Myth

Limited capacity under high demand:

- scaling and recovery failures

- blocked updates

Instance mixes:

- performance variability: careful with round robin

- complexity in Capacity Management

Example: if one Availability Zone out of three goes down, its 33% of the traffic is redirected to the nodes of the other zones, so tens of thousands of nodes can be required at the same time
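The arithmetic behind this example can be sketched in Go, assuming three equally loaded zones and the traffic of the failed zone spread evenly over the others (the fleet size and utilization are hypothetical).

```go
package main

import (
	"errors"
	"fmt"
)

// surgeAfterZoneLoss models `zones` equally loaded zones of `nodesPerZone`
// nodes each, running at utilization u (0..1). When one zone fails and its
// traffic is spread evenly over the survivors, it returns the survivors'
// utilization if nothing scales, and how many nodes each surviving zone must
// add (rounded up) to get back to u.
func surgeAfterZoneLoss(zones, nodesPerZone int, u float64) (float64, int, error) {
	if zones < 2 {
		return 0, 0, errors.New("need at least two zones to fail over")
	}
	survivors := zones - 1
	newUtil := u * float64(zones) / float64(survivors)
	// The failed zone's capacity has to be re-created on the survivors.
	addPerZone := (nodesPerZone + survivors - 1) / survivors
	return newUtil, addPerZone, nil
}

func main() {
	// Hypothetical fleet: 3 zones of 10,000 nodes each, at 60% utilization.
	u, add, err := surgeAfterZoneLoss(3, 10_000, 0.6)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("survivors at %.0f%% utilization, +%d nodes needed per surviving zone\n", u*100, add)
}
```

With 3 zones of 10,000 nodes at 60% utilization, the two surviving zones jump to 90% utilization if nothing scales, and each needs 5,000 more nodes to get back to 60%: 10,000 nodes requested at the same time, precisely when capacity may be limited.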

Conclusion

- incidents happen

- challenge your system

- be prepared to handle unexpected incidents

- resilience comes at a cost

- align with business expectations

- evolve. Do not overbuild

Resilience is built incrementally, little by little

My take: a very good talk, with lots of examples of failures at Datadog and what they did to fix them. It had lots of technical and organizational suggestions, as problems have different causes.

Conclusion

DevFest Nantes 2025 - Inside

I had a lot of fun coming to DevFest, answering questions, attending conferences and getting a few goodies.

I also had the opportunity to look into some business prospects and a few technical questions.

Moreover, it was a good time spent with a few colleagues, and I got to discover Nantes a little.

A good experience to try again in the future!